Credit Card Optical Character Recognition with OpenCV + Tesseract

Link: https://github.com/philippe-heitzmann/Credit_Card_Reader_OpenCV_Tesseract

Introduction

Accurately extracting a card’s 16-digit number (PAN) and cardholder name from images is foundational for building scalable card databases, improving fraud controls, and enabling smoother checkout. The goal here is a pipeline built on OpenCV and pytesseract that processes each image in 0.5 s or less.

Because large, public datasets with both PAN and name are scarce, we tested on a 23-image set collected from Google Images and hand-annotated. On this set, the pipeline reached 48% recall on the 16-digit number and 65% recall on the cardholder name. (Full dataset and code are in the repo.)

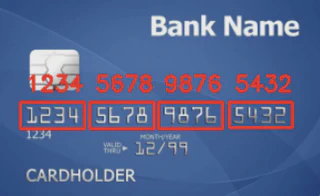

Figure 1. Sample dummy credit card images from the dataset

Methodology

We test two out-of-the-box components:

- Digits (PAN) via OpenCV template matching

- Cardholder name via Tesseract OCR, called through the pytesseract Python wrapper

i) Digit recognition

Template matching stores a reference image (template) for each digit 0–9, slides it across a candidate region, and scores the similarity at each position, much like applying a fixed, hand-designed kernel in a CNN.
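To make the scoring concrete, the NumPy sketch below reproduces what cv2.matchTemplate() computes with the TM_CCOEFF method used later in this post: at every offset, the mean-subtracted template is multiplied element-wise with the mean-subtracted image window and summed. It is a naive illustrative reimplementation, not OpenCV's code, and the helpers match_template_ccoeff() and best_digit() are hypothetical names.

```python
import numpy as np


def match_template_ccoeff(image: np.ndarray, template: np.ndarray) -> np.ndarray:
    """Naive TM_CCOEFF score map (hypothetical helper, for illustration only).

    For every top-left offset (y, x), sums (T - mean(T)) * (W - mean(W)),
    where W is the image window currently under the template.
    """
    image = image.astype(np.float64)
    t = template.astype(np.float64)
    t_centered = t - t.mean()
    th, tw = t.shape
    out_h, out_w = image.shape[0] - th + 1, image.shape[1] - tw + 1
    scores = np.empty((out_h, out_w))
    # Slide the template over every valid position (no padding)
    for y in range(out_h):
        for x in range(out_w):
            window = image[y:y + th, x:x + tw]
            scores[y, x] = np.sum(t_centered * (window - window.mean()))
    return scores


def best_digit(roi: np.ndarray, templates: dict) -> int:
    """Return the key of the template whose peak TM_CCOEFF score is highest."""
    peak = {d: match_template_ccoeff(roi, tpl).max() for d, tpl in templates.items()}
    return max(peak, key=peak.get)
```

When the candidate ROI has already been resized to the template's size, as in the pipeline below, the score map holds a single value per template, so classification reduces to picking the template with the largest score.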

Figure 2. Sample base credit card image

We first build ten digit templates from an OCR reference sheet by finding contours and cropping each digit ROI into a dictionary.

Figure 3. OCR reference used to build digit templates

To isolate candidate digit regions on the card:

Apply a tophat transform to highlight light digits against darker backgrounds.

Figure 4.

Run a Sobel operator to emphasize edges.

Convert to binary with Otsu thresholding.

Figure 5.

Code: build digit template map

```python
import cv2
import imutils
import numpy as np
from imutils import contours


def read_ocr(ocr_path):
    # Load the OCR-A reference sheet and binarise it (digits become white on black)
    ref = cv2.imread(ocr_path)
    ref = cv2.cvtColor(ref, cv2.COLOR_BGR2GRAY)
    ref = cv2.threshold(ref, 10, 255, cv2.THRESH_BINARY_INV)[1]

    # Find the outer contour of each digit and sort them left to right,
    # so that the i-th contour corresponds to digit i
    refCnts = cv2.findContours(ref.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)
    refCnts = imutils.grab_contours(refCnts)
    refCnts = contours.sort_contours(refCnts, method="left-to-right")[0]
    return ref, refCnts


def get_digits(ref, refCnts):
    # Map each digit (0-9) to its cropped template, resized to 57 px wide x 88 px tall
    digits = {}
    for (i, c) in enumerate(refCnts):
        (x, y, w, h) = cv2.boundingRect(c)
        roi = ref[y:y + h, x:x + w]
        roi = cv2.resize(roi, (57, 88))
        digits[i] = roi
    return digits


ocr_path = 'ocr_a_reference.png'
ref, refCnts = read_ocr(ocr_path)
digits = get_digits(ref, refCnts)
```

Once Otsu binarization has been applied, the next step is to extract the contours of the light regions in the image with OpenCV’s findContours() and imutils’ grab_contours(), and to compute a rectangular bounding box around each contour with OpenCV’s boundingRect(). The boxes are then filtered with three empirically chosen rules, all expressed relative to a card image standardized to a maximum height of 190 pixels:

- Vertical position: boxes whose y-coordinates fall outside the 85–145 pixel band are discarded, since this band was observed to contain the 16-digit number on every card in our dataset.

- Aspect ratio: boxes whose width/height ratio falls outside [2.5, 4.0] are discarded, since each of the four 4-digit groups that make up the 16-digit number was observed to fall in this range.

- Absolute size: boxes whose width falls outside [40, 55] pixels or whose height falls outside [10, 20] pixels are discarded, since these ranges were likewise observed to contain every 4-digit bounding box in our dataset.

The four bounding boxes that survive for our sample image are shown in Figure 6.

Figure 6. Extracted 4-digit bounding boxes sample view
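The three filters can be expressed as a small pure-Python function over (x, y, w, h) tuples as returned by cv2.boundingRect(). This is a sketch: the helper name filter_digit_groups() is hypothetical, and both the strict/inclusive choice for each bound and the reading of the 85–145 band as "the whole box must lie inside it" are assumptions.

```python
from typing import List, Tuple

# (x, y, w, h), the tuple layout returned by cv2.boundingRect
Box = Tuple[int, int, int, int]


def filter_digit_groups(boxes: List[Box],
                        y_band=(85, 145),
                        ar_range=(2.5, 4.0),
                        w_range=(40, 55),
                        h_range=(10, 20)) -> List[Box]:
    """Keep boxes that look like 4-digit PAN groups (hypothetical helper)."""
    keep = []
    for (x, y, w, h) in boxes:
        # 1) Vertical position: assumed to mean the whole box lies inside the band
        if not (y_band[0] <= y and y + h <= y_band[1]):
            continue
        # 2) Aspect ratio of a 4-digit group (strict bounds assumed)
        ar = w / float(h)
        if not (ar_range[0] < ar < ar_range[1]):
            continue
        # 3) Absolute width and height in pixels (strict bounds assumed)
        if not (w_range[0] < w < w_range[1] and h_range[0] < h < h_range[1]):
            continue
        keep.append((x, y, w, h))
    # Sort left to right so the groups are read in card order
    return sorted(keep, key=lambda b: b[0])
```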

With the 4-digit group regions extracted, each group is split into individual digit contours (digitCnts in the code below). Each digit ROI is resized to the same 57×88 size as the reference templates and compared against every 0–9 template from Figure 3 using OpenCV’s matchTemplate(), with OpenCV’s minMaxLoc() extracting the maximum correlation score. Because the ROI and the templates share the same size, matchTemplate() returns a single score per template, and the digit whose template scores highest is taken as the prediction.

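```python
import cv2
import numpy as np


def classify_group(group, digitCnts, digits):
    """Return the predicted digits of one 4-digit group as a string.

    group: binarised group sub-image; digitCnts: its digit contours, sorted
    left to right; digits: the template dictionary built by get_digits().
    """
    groupOutput = []
    # loop over the digit contours
    for c in digitCnts:
        # compute the bounding box of the individual digit, extract
        # the digit, and resize it to have the same fixed size as
        # the reference OCR-A images
        (x, y, w, h) = cv2.boundingRect(c)
        roi = group[y:y + h, x:x + w]
        roi = cv2.resize(roi, (57, 88))

        # initialize a list of template matching scores
        scores = []

        # loop over the reference digit name and digit ROI
        for (digit, digitROI) in digits.items():
            # apply correlation-based template matching, take the
            # score, and update the scores list
            result = cv2.matchTemplate(roi, digitROI, cv2.TM_CCOEFF)
            (_, score, _, _) = cv2.minMaxLoc(result)
            scores.append(score)

        # the template with the highest score gives the predicted digit
        groupOutput.append(str(list(digits.keys())[int(np.argmax(scores))]))
    return "".join(groupOutput)
```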

The final result of our digit template matching can be seen below, with each of our sixteen card number digits correctly identified by OpenCV’s template matching functionality:

Figure 7. Final digit recognition output for sample image

ii) Cardholder text recognition

To detect the cardholder text, we crop the bottom-left band of each card image, between 150 and 190 pixels in height and extending up to 200 pixels across, and apply the same binary Otsu thresholding to the grayscale crop so that the white cardholder characters stand out against the darker card background. The resulting sub-image is shown in Figure 8.

Figure 8. Transformed cardholder image

Each cropped sub-image is then passed through the get_chars() function below, which calls image_to_string() from pytesseract, a Python wrapper around Google’s Tesseract OCR engine. Tesseract runs the image through a pre-trained, neural-network-based recognizer and returns the detected text; for this image it outputs the string ‘CARDHOLDER,’ as shown in Figure 9.

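```python
import cv2
import matplotlib.pyplot as plt
import pytesseract as tess
from PIL import Image


def get_chars(img, show_image=False, **kwargs):
    # Accept either a file path or an already-loaded image array
    if isinstance(img, str):
        img = cv2.imread(img)

    # Optionally display the image: grayscale/binary crops directly,
    # colour images converted from OpenCV's BGR order to RGB
    if show_image:
        if img.ndim == 2:
            plt.imshow(img, cmap='gray')
        else:
            plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
        plt.title('Test Image')
        plt.show()

    # pytesseract accepts PIL images; extra kwargs (e.g. config) are passed through
    image = Image.fromarray(img)
    text = tess.image_to_string(image, **kwargs)
    print("PyTesseract detected the following text:", text)
    return text
```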

Figure 9. Detected cardholder text output

Finally, to strip any extraneous punctuation that Tesseract may pick up (such as the trailing comma above), the text is passed through the process_str() function below, which uppercases the string, removes punctuation with Python’s re module, and returns the first line. The final output, ‘CARDHOLDER’, matches the ground truth, so this counts as a correct prediction.

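```python
import re


def process_str(string):
    # Normalise case so predictions compare against uppercase ground truth
    string = string.upper()
    # Drop every character that is neither a word character nor whitespace
    string = re.sub(r'[^\w\s]', '', string)
    # Keep only the first line of Tesseract's output
    lines = string.splitlines()
    if len(lines) == 0:
        # Return an empty string (not an empty list) when nothing was detected
        return ''
    return lines[0]
```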

Full Dataset Results

Applying the pipeline to the full 23-image dataset yields 48% recall on the 16-digit card numbers and 65% recall on the cardholder text. For text recognition, the minimum pixel height used to crop the cardholder sub-image had a relatively large effect on performance, because cardholder names sit at noticeably different positions across the images. An exhaustive search over the [140, 160] pixel range identified 150 pixels as the best minimum height for locating the cardholder text.
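The post does not spell out how recall is computed; one reasonable reading is exact-match recall per image, i.e. the fraction of images whose cleaned prediction equals the annotation. The sketch below assumes that definition and writes the exhaustive search over the crop's minimum height as a simple loop. Both function names are hypothetical, and evaluate stands in for a callable that would run the text pipeline with a given minimum height and return its recall.

```python
from typing import Callable, Sequence


def exact_match_recall(predictions: Sequence[str], ground_truth: Sequence[str]) -> float:
    """Fraction of images whose prediction exactly matches the annotation (assumed metric)."""
    if len(predictions) != len(ground_truth):
        raise ValueError("predictions and ground_truth must have the same length")
    if not ground_truth:
        return 0.0
    hits = sum(p == t for p, t in zip(predictions, ground_truth))
    return hits / len(ground_truth)


def best_min_height(evaluate: Callable[[int], float], lo: int = 140, hi: int = 160) -> int:
    """Try every minimum crop height in [lo, hi] and return the best-scoring one.

    `evaluate` is a hypothetical callable returning recall for a given height.
    max() keeps the first candidate on ties, i.e. the smallest height.
    """
    return max(range(lo, hi + 1), key=evaluate)
```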

A natural next step for improving the system would be to train our own deep-learning OCR models on public datasets, such as MNIST for digit recognition and DDI-100 for text recognition, with the aim of improving on the 48–65% recall achieved here.

Thanks for reading!